Copilot

Pre-Installation and Deployment Preparations

System Requirements

Hardware Requirements:

- CPU: Intel or AMD, 32 cores or more

- Memory: 256GB RAM or more

- Storage: At least 200GB of available disk space

- GPU: Must support CUDA, with more than 40GB of GPU memory. Ampere architecture or newer is recommended (e.g., A100 80GB, L40).
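The CPU, memory, and disk thresholds above can be checked on the target server with a short Python script. This is a minimal sketch, not a tool shipped with the package. It assumes Linux, treats "GB" as 10^9 bytes, and checks free space on the filesystem that holds `/` (pass another path if Docker stores its images elsewhere). The GPU is checked separately with `nvidia-smi` during the Pre-Installation Check. Note that `os.cpu_count()` reports logical CPUs, so hyper-threads are counted; use `lscpu` if you need the physical core count.

```python
# Rough pre-installation hardware check (a sketch, not an official tool).
# Assumptions: thresholds come from the Hardware Requirements list,
# "GB" means 10**9 bytes, and the disk check looks at the filesystem
# that holds `path` (default "/"). The GPU is checked separately.
import os
import shutil

MIN_CPU_CORES = 32
MIN_RAM_GB = 256
MIN_DISK_GB = 200
GB = 10**9


def check_hardware(cpu_cores, ram_bytes, free_disk_bytes):
    """Return a list of problems; an empty list means every check passed."""
    problems = []
    if cpu_cores < MIN_CPU_CORES:
        problems.append(f"CPU: {cpu_cores} logical CPUs, need {MIN_CPU_CORES}")
    if ram_bytes < MIN_RAM_GB * GB:
        problems.append(f"RAM: {ram_bytes / GB:.0f} GB, need {MIN_RAM_GB} GB")
    if free_disk_bytes < MIN_DISK_GB * GB:
        problems.append(f"Disk: {free_disk_bytes / GB:.0f} GB free, need {MIN_DISK_GB} GB")
    return problems


def probe_local_machine(path="/"):
    """Read the logical CPU count, physical RAM and free disk space (Linux)."""
    cores = os.cpu_count() or 0
    ram = os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")
    free = shutil.disk_usage(path).free
    return cores, ram, free


if __name__ == "__main__":
    issues = check_hardware(*probe_local_machine())
    print("Hardware OK" if not issues else "\n".join(issues))
```

Measuring RAM in decimal GB leaves some slack, because the memory the OS reports is usually a little below the installed amount.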

Software Requirements:

- Operating System: Ubuntu 22.04

- CUDA: ≥11.8 recommended (compatible with the Ampere architecture)

- NVIDIA Driver: ≥535.x

- Docker: ≥20.10 (the NVIDIA Container Toolkit must also be installed)

AI package

Contact sales to obtain the AI Docker image, then save the image file xiangyu-ai.tar to the server.

Installation Steps

Pre-Installation Check:

- NVIDIA Driver & CUDA: Run the following command to verify that the Driver Version and CUDA Version meet the Software Requirements:

```shell
nvidia-smi
```

Example output:

```
+-----------------------------------------------------------------------------------------+
| NVIDIA-SMI 560.35.03              Driver Version: 560.35.03      CUDA Version: 12.6     |
|-----------------------------------------+------------------------+----------------------+
| GPU  Name                 Persistence-M | Bus-Id          Disp.A | Volatile Uncorr. ECC |
| Fan  Temp   Perf          Pwr:Usage/Cap |           Memory-Usage | GPU-Util  Compute M. |
|                                         |                        |               MIG M. |
|=========================================+========================+======================|
|   0  NVIDIA L40                     On  |   00000000:08:00.0 Off |                    0 |
| N/A   73C    P0            107W /  300W |    1603MiB /  46068MiB |      0%      Default |
|                                         |                        |                  N/A |
+-----------------------------------------+------------------------+----------------------+
|   1  NVIDIA L40                     On  |   00000000:09:00.0 Off |                    0 |
| N/A   47C    P0             84W /  300W |   10817MiB /  46068MiB |      0%      Default |
|                                         |                        |                  N/A |
+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+
| Processes:                                                                              |
|  GPU   GI   CI        PID   Type   Process name                              GPU Memory |
|        ID   ID                                                               Usage      |
|=========================================================================================|
|    0   N/A  N/A      7072      C   text-embeddings-router                       1594MiB |
|    1   N/A  N/A    309241      C   ...rs/cuda_v12_avx/ollama_llama_server      10808MiB |
+-----------------------------------------------------------------------------------------+
```

- Docker Environment Check: Run the following command to verify that the Docker version meets the Software Requirements:

```shell
docker --version
```

Example output:

```
Docker version 27.4.1, build b9d17ea
```

- NVIDIA Container Toolkit Check: Run the following command to verify that the NVIDIA Container Toolkit is installed:

```shell
nvidia-container-runtime --version
```

Example output:

```
NVIDIA Container Runtime version 1.17.4
commit: 9b69590c7428470a72f2ae05f826412976af1395
spec: 1.2.0

runc version 1.2.2
commit: v1.2.2-0-g7cb3632
spec: 1.2.0
go: go1.22.9
libseccomp: 2.5.3
```
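The three checks above can also be scripted. The sketch below is an illustration and is not part of the Xiangyu AI package. It runs `nvidia-smi`, `docker --version` and `nvidia-container-runtime --version`, then compares the results with the Software Requirements. The regular expressions assume output shaped like the examples shown here. The hardware requirement of "greater than 40GB" of GPU memory is read as more than 40 GiB (40,960 MiB) per GPU.

```python
# Pre-installation software check (a sketch, not an official tool).
# It runs the three commands from the checklist and compares the output
# against the Software Requirements. The regular expressions assume output
# formatted like the examples in this guide.
import re
import subprocess

MIN_DRIVER = (535,)
MIN_CUDA = (11, 8)
MIN_DOCKER = (20, 10)
MIN_GPU_MEM_MIB = 40 * 1024  # "greater than 40GB", read here as 40 GiB per GPU


def version_tuple(text):
    """Turn '560.35.03' into (560, 35, 3) so versions compare numerically."""
    return tuple(int(part) for part in text.split("."))


def check_nvidia_smi(output):
    problems = []
    driver = re.search(r"Driver Version:\s*([\d.]+)", output)
    cuda = re.search(r"CUDA Version:\s*([\d.]+)", output)
    if not driver or version_tuple(driver.group(1)) < MIN_DRIVER:
        problems.append("NVIDIA driver missing or older than 535")
    if not cuda or version_tuple(cuda.group(1)) < MIN_CUDA:
        problems.append("CUDA missing or older than 11.8")
    # Each GPU row contains a "used / total" pair such as "1603MiB /  46068MiB".
    pairs = re.findall(r"(\d+)MiB\s*/\s*(\d+)MiB", output)
    if not pairs:
        problems.append("no GPU memory information found")
    for index, (_, total) in enumerate(pairs):
        if int(total) <= MIN_GPU_MEM_MIB:
            problems.append(f"GPU {index}: {total} MiB is not more than 40 GiB")
    return problems


def check_docker(output):
    match = re.search(r"Docker version (\d+)\.(\d+)", output)
    if not match or (int(match.group(1)), int(match.group(2))) < MIN_DOCKER:
        return ["Docker missing or older than 20.10"]
    return []


def check_container_toolkit(output):
    if not re.search(r"NVIDIA Container Runtime version \d", output):
        return ["NVIDIA Container Toolkit not detected"]
    return []


def run(command):
    """Return the command's combined stdout/stderr, or '' if it cannot run."""
    try:
        result = subprocess.run(command, capture_output=True, text=True, check=True)
    except (OSError, subprocess.CalledProcessError):
        return ""
    return result.stdout + result.stderr


if __name__ == "__main__":
    problems = (
        check_nvidia_smi(run(["nvidia-smi"]))
        + check_docker(run(["docker", "--version"]))
        + check_container_toolkit(run(["nvidia-container-runtime", "--version"]))
    )
    print("Software OK" if not problems else "\n".join(problems))
```

The parsing functions are kept separate from `run()`, so they can be tested against saved command output on a machine without a GPU.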

AI package installation

-

Download the Xiangyu AI image file to your local machine.

-

Run the following command to load the image into the local Docker library.

docker load -i xiangyu-ai.tar -

Run the following command to load the LLM.

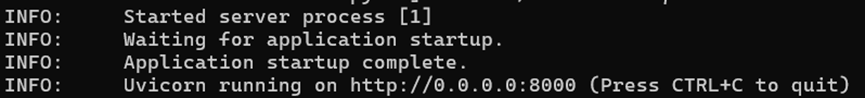

docker run --runtime nvidia --gpus all -p 8000:8000 --ipc=host xiangyu-ai:0.7.2 --model /models --served-model-name Qwen2.5-Coder-14B-InstructWhen the following information appears on the screen, it means the model has been successfully loaded.
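Before you configure the extension, you can send one request directly to the container to confirm it answers. This is a minimal sketch under stated assumptions. It assumes the container serves an OpenAI-compatible chat completions API under `http://<server>:8000/v1`, which is what the `provider: openai` / `apiBase` entry in the config.yaml example of the Configure Copilot Extension section relies on. It also assumes the model name equals the `--served-model-name` value. `SERVER` is a hypothetical placeholder address. No API key is sent, which assumes the server was started without one.

```python
# Minimal smoke test for the model container (a sketch, not an official tool).
# Assumes an OpenAI-compatible API at SERVER and the model name passed to
# --served-model-name. SERVER is a hypothetical placeholder address.
import json
import urllib.request

SERVER = "http://127.0.0.1:8000/v1"
MODEL = "Qwen2.5-Coder-14B-Instruct"


def build_chat_request(base_url, model, prompt):
    """Build a POST request for the OpenAI-style /chat/completions endpoint."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 64,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_json):
    """Return the assistant's text from a chat completion response."""
    return response_json["choices"][0]["message"]["content"]


if __name__ == "__main__":
    request = build_chat_request(SERVER, MODEL, "Write a hello world program in Python.")
    # The first request can be slow while the model warms up, hence the long timeout.
    with urllib.request.urlopen(request, timeout=120) as response:
        print(extract_reply(json.load(response)))
```

If this prints a reply, the container side works, and any later problem is more likely in the extension configuration.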

Configure Copilot Extension

-

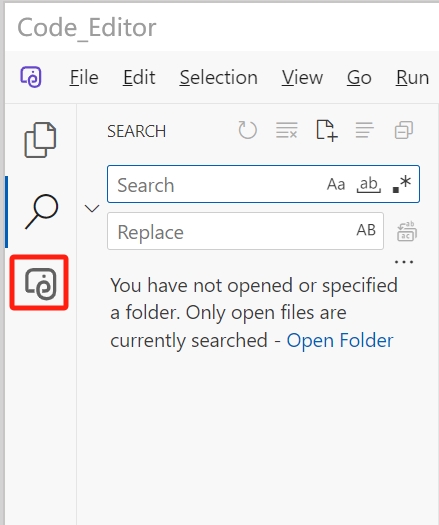

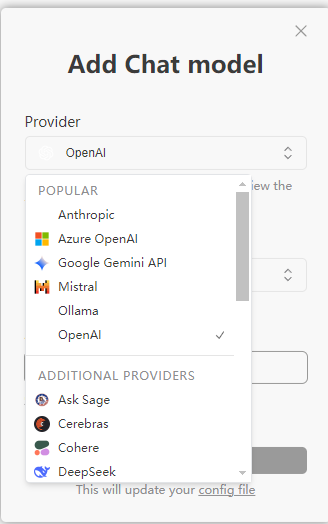

Open the XiangYu AI Code Assistant interface in the host computer software.

-

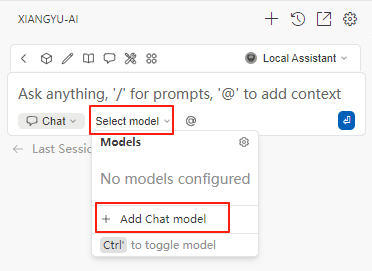

Click the

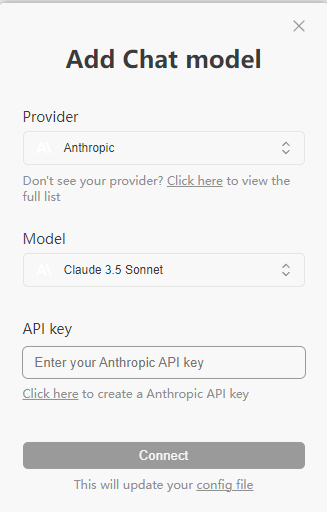

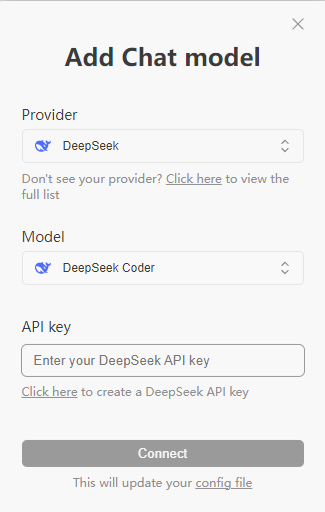

Select modelicon shown in the figure below to configure the AI model access information, then selectAdd Chat model.

- In the pop-up window, select the appropriate model provider and model:

- For users outside Mainland China, Anthropic or OpenAI is recommended. Choose a suitable model from the list and enter your API key.

- For users in Mainland China, DeepSeek is recommended. Choose a suitable model from the list (e.g., Deepseek-coder) and enter your API key.

-

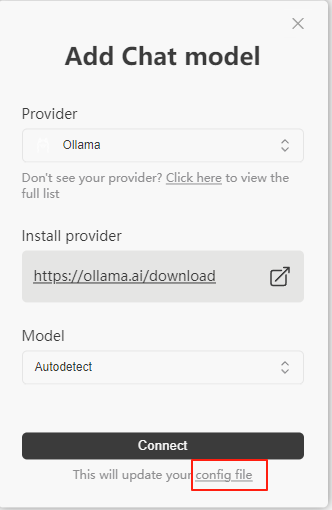

To add a locally deployed model, click the config file link below to open the

config.yamlfile.

- Configure the AI model access information as shown below. The OpenAI/Qwen2.5-Coder-14B-Instruct entry corresponds to the model deployed in the AI package installation section above. If you deploy models locally with Ollama, use the qwen2.5-coder 3b entry as a template. Fill in the parameters (model name, apiBase, etc.) to match your deployment; x.x.x.x stands for the server's IP address.

```yaml
name: Local Assistant
version: 1.0.0
schema: v1
models:
  - name: OpenAI/Qwen2.5-Coder-14B-Instruct
    provider: openai
    model: Qwen2.5-Coder-14B-Instruct
    apiBase: http://x.x.x.x:8000/v1
    apiKey: test
    roles:
      - chat
      - edit
      - apply
      - autocomplete
  - name: qwen2.5-coder 3b
    provider: ollama
    model: qwen2.5-coder:3b
    apiBase: http://x.x.x.x:11434
    roles:
      - chat
      - edit
      - apply
      - autocomplete
    defaultCompletionOptions:
      contextLength: 32768
      maxTokens: 20480
context:
  - provider: code
  - provider: docs
  - provider: diff
  - provider: terminal
  - provider: problems
  - provider: folder
  - provider: codebase
```

- In the XIANGYU - AI chat window, ask a few questions to confirm that the model responds correctly.
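If the chat window reports an error, a common cause is a `model` value in config.yaml that does not match what the server actually serves. For the container started earlier, `model` must equal the `--served-model-name` value. The helper below is a sketch built on assumptions and is not part of the extension. It queries `GET {apiBase}/models` for the OpenAI-compatible entry and Ollama's `GET /api/tags` for the Ollama entry, then reports whether each configured model name appears. `MODELS` copies the two entries of the config example by hand instead of parsing the YAML, so only the Python standard library is needed. Replace `x.x.x.x` with your server addresses.

```python
# Check that each configured model is actually served (a sketch, not an official tool).
# MODELS mirrors the config.yaml example by hand; replace x.x.x.x before running.
import json
import urllib.request

MODELS = [
    {"provider": "openai", "model": "Qwen2.5-Coder-14B-Instruct", "apiBase": "http://x.x.x.x:8000/v1"},
    {"provider": "ollama", "model": "qwen2.5-coder:3b", "apiBase": "http://x.x.x.x:11434"},
]


def list_url(entry):
    """URL that lists served models: Ollama uses /api/tags, OpenAI-style APIs use /models."""
    base = entry["apiBase"].rstrip("/")
    return base + ("/api/tags" if entry["provider"] == "ollama" else "/models")


def served_names(provider, payload):
    """Extract model names from the JSON returned by list_url()."""
    if provider == "ollama":
        return {m["name"] for m in payload.get("models", [])}
    return {m["id"] for m in payload.get("data", [])}


def fetch_json(url, timeout=10):
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.load(response)


if __name__ == "__main__":
    for entry in MODELS:
        url = list_url(entry)
        try:
            names = served_names(entry["provider"], fetch_json(url))
        except OSError as error:  # URLError and timeouts are OSError subclasses
            print(f"{entry['model']}: cannot reach {url} ({error})")
            continue
        status = "OK" if entry["model"] in names else f"not found, server has {sorted(names)}"
        print(f"{entry['model']}: {status}")
```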